Query Scheduling and Data Loading with ByteHouse and Airflow

An introduction to Apache Airflow and how to connect it with ByteHouse CLI

Apache Airflow is an open-source workflow management tool for data engineering pipelines. It was created at Airbnb in 2014 to manage the company's growing number of complex workflows. With Airflow, Airbnb could programmatically author and schedule its workflows and monitor them through Airflow's built-in user interface.

Airflow has always been open source, and in March 2016, it was designated as an Apache Incubator project. In January 2019, it became a Top-Level Apache Software Foundation project.

Airflow is written in Python, and workflows are defined as Python scripts. Airflow is designed around the principle of "configuration as code": whereas other workflow systems rely on markup languages such as XML for their configuration, Python lets developers import libraries and classes to help build their workflows.

Airflow orchestrates workflows as directed acyclic graphs (DAGs). Tasks and their dependencies are defined in Python, and Airflow schedules and executes them. DAGs can run on a regular schedule (e.g., hourly or daily) or in response to external event triggers (e.g., a file appearing in Hive). Earlier DAG-based schedulers such as Oozie and Azkaban relied on multiple configuration files and file-system trees to define a DAG, whereas an Airflow DAG can typically be defined in a single Python file.
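To make the DAG idea concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`, Python 3.9+), not Airflow itself. The task names are hypothetical. It shows that declaring each task's dependencies is enough to derive a valid execution order, and that a cycle is rejected, which is why the graph has to be acyclic.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key maps a task to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "load_to_bytehouse": {"extract"},
    "run_report_query": {"load_to_bytehouse"},
    "notify": {"run_report_query"},
}


def execution_order(graph):
    """Return one order in which the tasks can run without breaking a dependency."""
    return list(TopologicalSorter(graph).static_order())


def has_cycle(graph):
    """Return True if the dependency graph contains a cycle (i.e. is not a DAG)."""
    try:
        TopologicalSorter(graph).prepare()
    except CycleError:
        return True
    return False


if __name__ == "__main__":
    print(execution_order(pipeline))
    # ['extract', 'load_to_bytehouse', 'run_report_query', 'notify']

    # Making "extract" depend on "notify" closes a loop, so no valid order exists.
    print(has_cycle({**pipeline, "extract": {"notify"}}))  # True
```

Airflow performs the same kind of dependency resolution for you; in a real DAG file the edges are declared between operators rather than in a dictionary.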

We'll quickly illustrate how you can connect Apache Airflow with ByteHouse CLI to schedule queries and data loading.

Prerequisites

This walkthrough assumes you have completed the following steps:

Registered a ByteHouse account (start with a FREE account)

Installed ByteHouse CLI in your virtual or local environment and logged in with your ByteHouse account.

Installed Airflow

Initialised the Airflow webserver

Configured YAML

Refer to this guide to complete these steps.
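Before moving on, it can help to confirm that the tools from the steps above are reachable from your shell. This is a minimal sketch using `shutil.which`. The `airflow` command is what an Apache Airflow installation provides; `bytehouse-cli` is a hypothetical executable name for the ByteHouse CLI, so replace it with the name your installation actually uses.

```python
import shutil

# "airflow" is installed with Apache Airflow. "bytehouse-cli" is a HYPOTHETICAL
# name for the ByteHouse CLI binary; change it to match your installation.
REQUIRED_TOOLS = ["airflow", "bytehouse-cli"]


def check_tools(names):
    """Map each executable name to True if it can be found on PATH."""
    return {name: shutil.which(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_tools(REQUIRED_TOOLS).items():
        print(f"{name}: {'found' if found else 'MISSING'}")
```

Run it from the same virtual environment you will use for Airflow, since a tool installed in a different environment will not be on that PATH.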

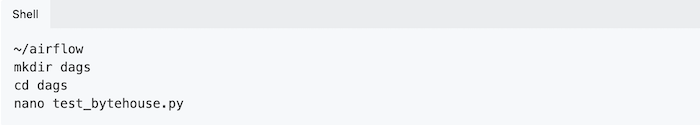

Create a DAG job

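Create a folder named 'dags' in your Airflow home directory (by default, Airflow loads DAG files from the dags folder under AIRFLOW_HOME, which defaults to ~/airflow), then create test_bytehouse.py in it to start a new DAG job.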
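If you prefer to script this step, here is a minimal sketch with `pathlib`. It assumes Airflow's default layout (home directory taken from the `AIRFLOW_HOME` environment variable, falling back to `~/airflow`, with DAGs in its `dags` subfolder) and that the `dags_folder` setting has not been changed. The function name `create_dag_file` is hypothetical.

```python
import os
from pathlib import Path


def create_dag_file(airflow_home=None, filename="test_bytehouse.py"):
    """Create <AIRFLOW_HOME>/dags and an empty DAG file inside it.

    Uses AIRFLOW_HOME if set, otherwise ~/airflow (Airflow's default home).
    Assumes the default dags_folder setting, i.e. <AIRFLOW_HOME>/dags.
    """
    home = Path(airflow_home or os.environ.get("AIRFLOW_HOME", Path.home() / "airflow"))
    dags_dir = home / "dags"
    dags_dir.mkdir(parents=True, exist_ok=True)
    dag_file = dags_dir / filename
    # touch() only updates the timestamp of an existing file; it never clears its contents.
    dag_file.touch(exist_ok=True)
    return dag_file
```

With `AIRFLOW_HOME` unset, `create_dag_file()` returns `~/airflow/dags/test_bytehouse.py` (expanded to your home directory), ready for the DAG code.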

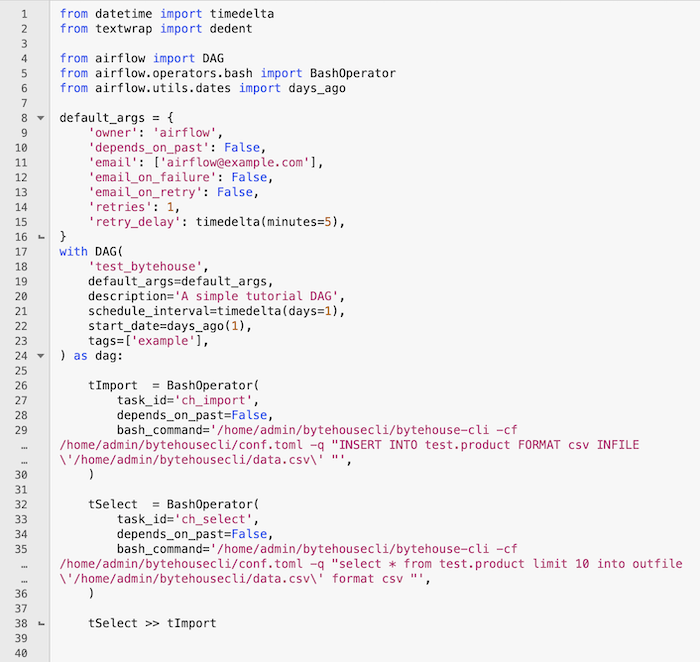

Add the code from this page to test_bytehouse.py. The job uses Airflow's BashOperator to call ByteHouse CLI, with one task that runs a query and another that loads data into ByteHouse.
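This is not the code from that page; it is a minimal sketch of how such a DAG file could be structured, assuming Airflow 2.4 or later. The CLI invocation template (`bytehouse-cli --query {sql}`), the SQL statements, the table `my_db.my_table`, and the task IDs are all hypothetical placeholders rather than ByteHouse CLI's documented interface; substitute the command line from the ByteHouse CLI documentation. The Airflow import is guarded only so the helper can be imported where Airflow is not installed.

```python
import shlex
from datetime import datetime

# HYPOTHETICAL invocation template: the executable name and the "--query" flag
# are assumptions. Replace them with the command documented for ByteHouse CLI.
BYTEHOUSE_CLI = "bytehouse-cli --query {sql}"

# HYPOTHETICAL SQL: replace the table name and statements with your own.
LOAD_SQL = "INSERT INTO my_db.my_table VALUES (1, 'example')"
QUERY_SQL = "SELECT count(*) FROM my_db.my_table"


def bytehouse_command(sql):
    """Build a shell command that passes one SQL statement to the CLI as a single argument."""
    return BYTEHOUSE_CLI.format(sql=shlex.quote(sql))


try:  # Airflow 2.x import paths
    from airflow import DAG
    from airflow.operators.bash import BashOperator
except ImportError:  # lets the helper above be imported without Airflow installed
    DAG = None

if DAG is not None:
    with DAG(
        dag_id="test_bytehouse",
        start_date=datetime(2024, 1, 1),
        schedule="@daily",  # Airflow 2.4+; older 2.x releases use schedule_interval
        catchup=False,  # do not backfill runs between start_date and today
    ) as dag:
        load = BashOperator(
            task_id="bytehouse_load",  # hypothetical task ID
            bash_command=bytehouse_command(LOAD_SQL),
        )
        query = BashOperator(
            task_id="bytehouse_query",  # hypothetical task ID
            bash_command=bytehouse_command(QUERY_SQL),
        )
        load >> query  # the query task runs only after the load task succeeds
```

`shlex.quote` keeps each SQL statement intact as one shell argument, so spaces and quotes in the query survive the shell that BashOperator starts. The `load >> query` line is the Python form of a DAG edge.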

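Run python test_bytehouse.py in the current folder. If the script runs without raising an exception, the file has no syntax or import errors, and Airflow will pick up the DAG from the dags folder.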
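If you want to run the same check from Python (for example, in a CI job), here is a minimal sketch using the standard library's `importlib`. It executes the file as a module, much like `python test_bytehouse.py` does except that `__name__` is set to a placeholder, and reports any exception. The function name `check_dag_file` is hypothetical; Airflow's own `DagBag` loader is an alternative that this sketch does not use.

```python
import importlib.util
import traceback


def check_dag_file(path):
    """Execute a DAG file as a module and report whether it raised.

    Returns None if the file runs cleanly, otherwise the formatted traceback
    (syntax errors, failed imports, and runtime errors are all caught).
    """
    spec = importlib.util.spec_from_file_location("dag_under_test", path)
    module = importlib.util.module_from_spec(spec)
    try:
        spec.loader.exec_module(module)
    except Exception:
        return traceback.format_exc()
    return None


if __name__ == "__main__":
    import sys

    error = check_dag_file(sys.argv[1] if len(sys.argv) > 1 else "test_bytehouse.py")
    print("OK" if error is None else error)
```

A clean result only means the file imports; whether the tasks themselves succeed is checked by running them, as described in the next section.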

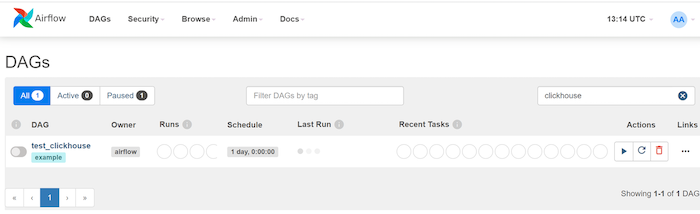

Refresh the Airflow web page in your browser; the newly created DAG, test_bytehouse, now appears in the DAG list.

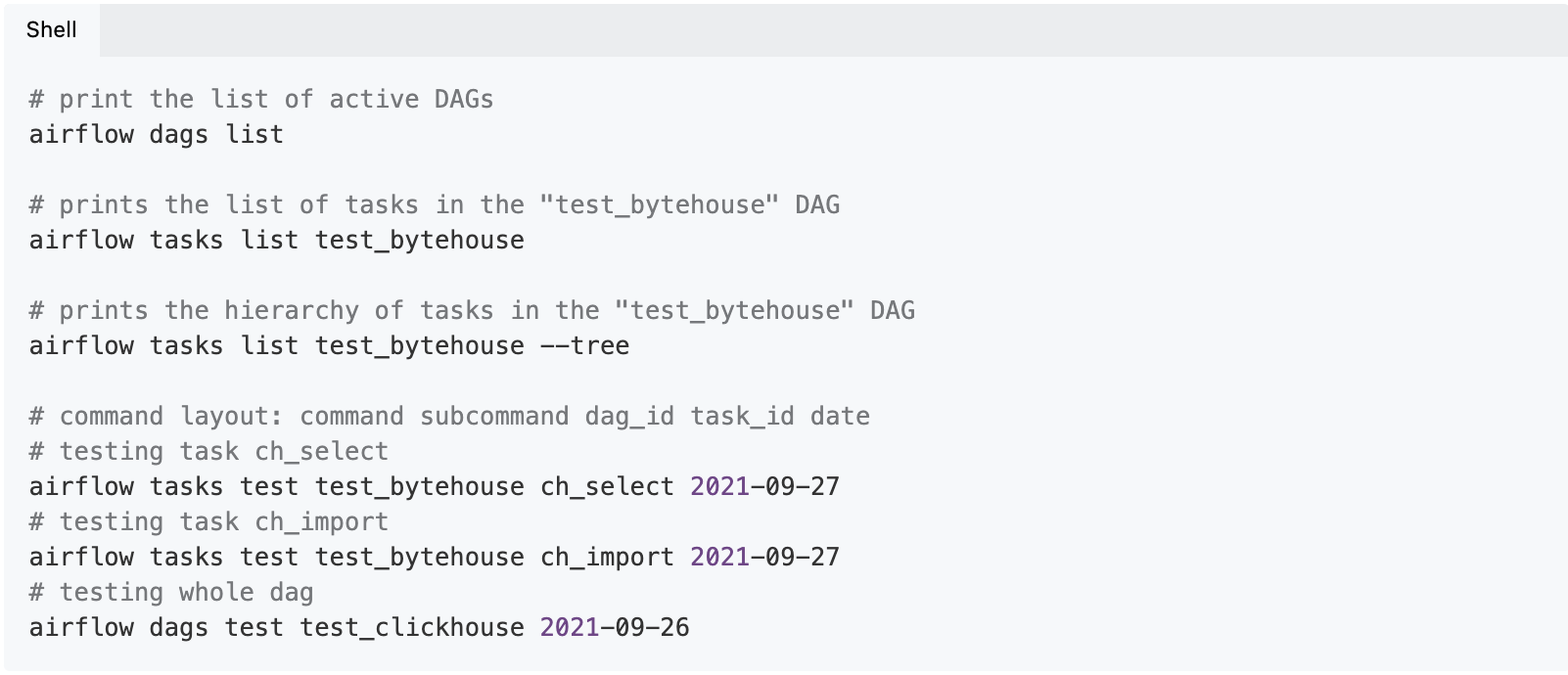

Execute the DAG

Run the following Airflow commands to check the DAG list and test the individual tasks in the test_bytehouse DAG. You can test the query execution and data import tasks separately.
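The commands in the screenshot can also be driven from a script. This minimal sketch assembles the Airflow 2.x CLI calls `airflow dags list`, `airflow tasks list <dag_id>`, and `airflow tasks test <dag_id> <task_id> <date>`; the latter runs a single task instance without checking dependencies or recording its state in the metadata database. The task IDs and the date are hypothetical; use the `task_id` values from your own DAG file.

```python
import subprocess

DAG_ID = "test_bytehouse"
# HYPOTHETICAL task IDs: replace them with the task_id values in your DAG file.
TASK_IDS = ["bytehouse_query", "bytehouse_load"]


def airflow_commands(dag_id, task_ids, logical_date):
    """Return the Airflow 2.x CLI commands for listing a DAG and testing its tasks."""
    commands = [
        ["airflow", "dags", "list"],
        ["airflow", "tasks", "list", dag_id],
    ]
    # One "tasks test" call per task, so query and load can be checked separately.
    for task_id in task_ids:
        commands.append(["airflow", "tasks", "test", dag_id, task_id, logical_date])
    return commands


def run(commands):
    """Print and execute each command, stopping at the first failure."""
    for cmd in commands:
        print("$", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

In an environment where Airflow is installed, `run(airflow_commands(DAG_ID, TASK_IDS, "2024-01-01"))` prints each command before executing it; `check=True` raises `CalledProcessError` as soon as a task fails.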

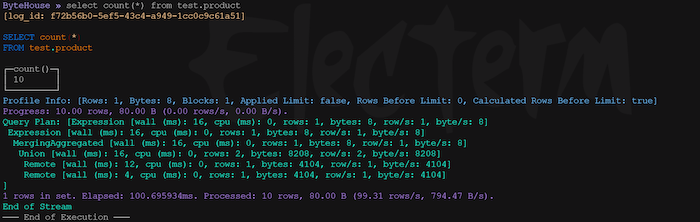

After running the DAG, open the query history page and the database module in your ByteHouse account to confirm that the data was queried and loaded successfully.

Register for a free trial account here and get US$400 credit.